Shiny: Performance tuning with future & promises - Part 1

In our previous article about Shiny we shared our experiences with load testing and horizontal scaling of apps. We showed the design of a process from a proof of concept to a company-wide application.

This second part of the blog series focuses on the R packages future & promises, which are used for optimizations within the app. They can drastically reduce potential waiting times for the user.

In order to cover this topic in as much detail as possible, the first part of the article covers the theory of how Shiny and future & promises work. It also explains the asynchronous programming techniques on which the package functions are based. In the second part, a practical example shows how the ideas for optimization can be implemented.

Shiny workflow - connections and R processes

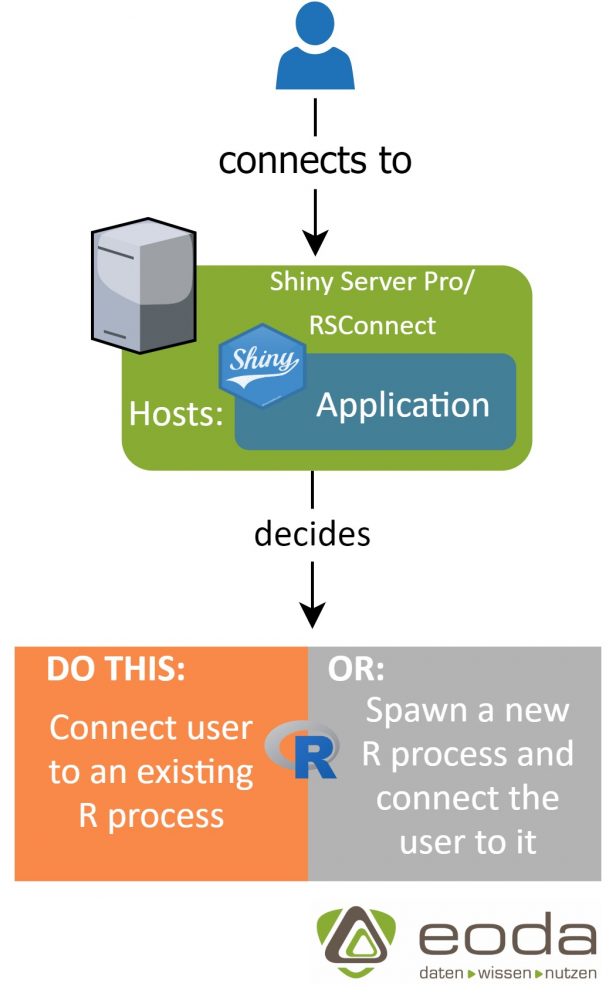

The following figure shows how user access to a Shiny app is handled:

Each time a user accesses a Shiny app, the server decides whether to connect the user to an existing R process or to start a new R process for them. How exactly the server makes this decision can be controlled separately for each application via certain tuning parameters.

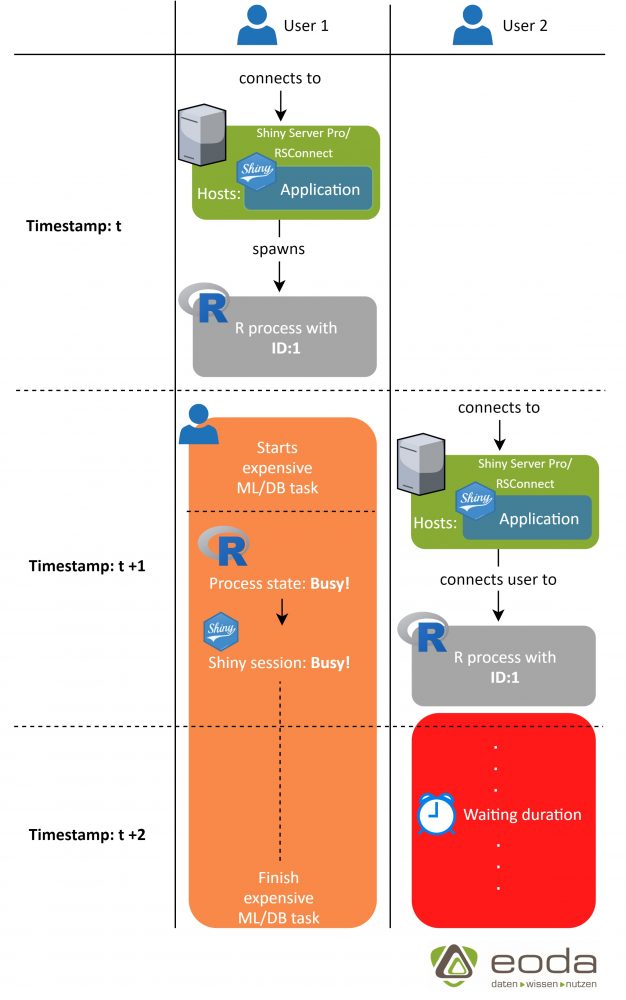

R is single-threaded, i.e. all commands within a process are executed sequentially (not in parallel). For this reason, the process highlighted in orange may cause delays in the execution of the app. Whether it does depends on what the other users connected to the same process are doing. The following graphic illustrates this usage.

Since user 2 is connected to the same R process as user 1, they have to wait until the task started by user 1 has finished before they can use the app. Since it is usually impractical (or impossible) to assign each user a separate R session for the Shiny app, this is where the optimization options mentioned above come in. They are implemented with future & promises and are discussed in more detail below.
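The blocking effect can be reproduced without Shiny. The following is a minimal sketch in base R; `slow_task()` and `quick_task()` are hypothetical stand-ins for the work requested by user 1 and user 2 and are not taken from a real app.

```r
# Hypothetical stand-ins for the work requested by two users
# who share one R process (names are illustrative only).
slow_task  <- function() { Sys.sleep(1); "user 1: long task finished" }
quick_task <- function() "user 2: quick task finished"

start <- Sys.time()

# R evaluates these calls one after the other within a single process.
res_user1 <- slow_task()
res_user2 <- quick_task()

# Time until user 2 gets a result, including the wait for user 1's task.
wait_user2 <- as.numeric(difftime(Sys.time(), start, units = "secs"))
print(wait_user2)
```

Although the task of user 2 is trivial, its result only becomes available after the long task of user 1 has completed.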

future & promises - Asynchronous programming in R

Anyone who has worked with other programming languages will probably have encountered the term “asynchronous programming” in one form or another. The idea behind it is simple: long-running tasks are taken out of a process's task list and handed off elsewhere in order to keep the original process responsive. In our example, one of these “process blocking tasks” would be the machine learning/database task started by user 1. The R packages future & promises implement this programming paradigm for R, which natively only allows sequential (“synchronous”) programming. The workflow is divided into different classes (so-called “plans”):

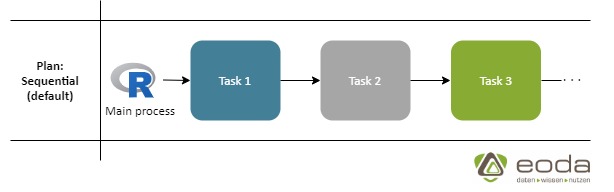

The reference case (sequential execution) reflects the “normal” way in which R works. A process executes its tasks one after the other, i.e. later tasks must wait for their predecessors to complete.
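As a small illustration of this reference behaviour, the sketch below uses the `sequential` plan of the future package. It assumes that future is installed; the variable names are illustrative only.

```r
library(future)

# The sequential plan evaluates every future in the calling R process.
plan(sequential)

main_pid <- Sys.getpid()

# The expression is evaluated right away and blocks the main process
# until it has finished.
f <- future(Sys.getpid())
task_pid <- value(f)

# Compare the process ID seen by the task with that of the main process.
same_process <- identical(task_pid, main_pid)
print(same_process)
```

Because the task runs in the main process itself, nothing else can be processed there until it is done.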

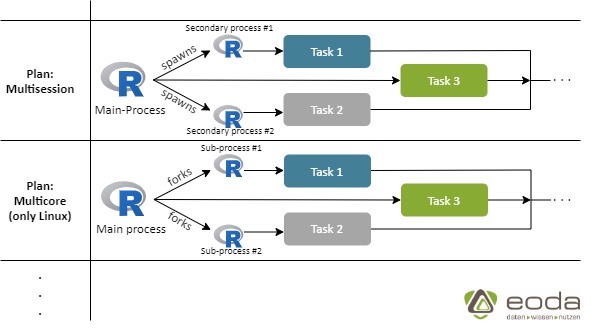

In the asynchronous plans shown above, tasks 1 & 2 are each handed off to secondary/sub-processes so that the main process remains free and task 3 can be processed. The difference is that “Multisession” starts two new R processes on which the tasks are executed. “Multicore”, on the other hand, forks the main process into two sub-processes, which is only possible on Unix-like systems such as Linux and macOS, not on Windows. Further plans, e.g. “cluster”, allow tasks to be handed off to distributed systems.
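The multisession case can be sketched as follows. This is a minimal example under stated assumptions: the future package is installed, `workers = 2` is an arbitrary choice that mirrors the two sub-processes described here, and `Sys.sleep(1)` stands in for real work.

```r
library(future)

# Start two background R sessions (assumption: 2 workers, as in the text).
plan(multisession, workers = 2)

main_pid <- Sys.getpid()

# Tasks 1 & 2 are handed off; future() returns without waiting for them.
f1 <- future({ Sys.sleep(1); Sys.getpid() })
f2 <- future({ Sys.sleep(1); Sys.getpid() })

# Task 3 runs in the main process while tasks 1 & 2 are still busy.
task3 <- paste("task 3 processed by main process", main_pid)

# value() blocks until the respective task has finished.
worker_pids <- c(value(f1), value(f2))
print(worker_pids)

# Switch back to sequential processing, which also shuts down the workers.
plan(sequential)
```

The process IDs returned by the two futures differ from that of the main process, which shows that the work was done elsewhere. Replacing `multisession` with `multicore` would use forked sub-processes instead, where the operating system supports it.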

Optimization: Inter-session vs. Intra-session

To make the steps in the following practical article easier to follow, this section presents the two types of optimization into which in-app performance tuning can be divided. The distinction has a significant influence on the optimization process and is therefore essential for a common understanding.

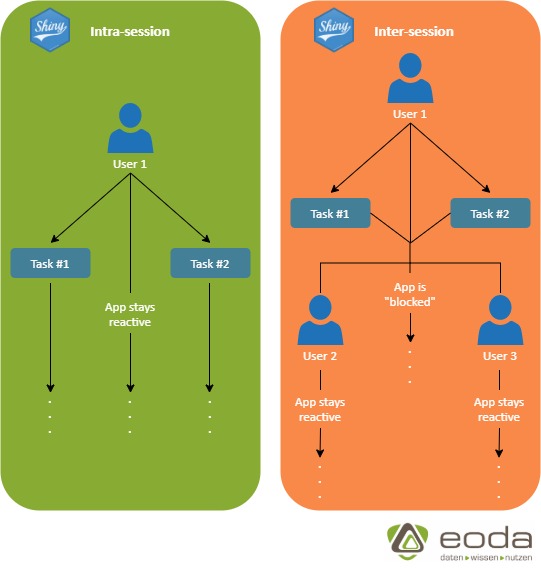

As the graphic shows, performance tuning is divided into inter-session and intra-session optimization. If an app is optimized with regard to intra-session performance, the goal is to reduce the waiting time for the user who is running the current task.

In the Shiny context, the packages future & promises are designed for inter-session optimization. The focus here is on keeping the app responsive for all other users accessing the app at the same time. The user who starts the task will still have to wait for it to complete before they can continue using the app.
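A minimal sketch of what inter-session optimization can look like in a Shiny app is shown below. It assumes that the shiny, future and promises packages are installed; the input and output IDs (`run`, `result`) and the five-second `Sys.sleep()` are hypothetical placeholders for a real long-running task.

```r
library(shiny)
library(future)
library(promises)

# Long-running tasks are evaluated in background R sessions.
plan(multisession)

ui <- fluidPage(
  actionButton("run", "Run long task"),   # hypothetical input ID
  verbatimTextOutput("result")            # hypothetical output ID
)

server <- function(input, output, session) {
  # The future is created in the main process but evaluated elsewhere,
  # so other sessions on this R process are not blocked meanwhile.
  long_task <- eventReactive(input$run, {
    future({
      Sys.sleep(5)   # placeholder for a machine learning/database task
      "long task finished"
    })
  })

  # The promise pipe passes the value on once the future has resolved.
  output$result <- renderText({
    long_task() %...>% paste("Status:", .)
  })
}

app <- shinyApp(ui, server)
# runApp(app)   # uncomment to launch the app interactively
```

The user who clicks the button still waits for the result, but other users connected to the same R process can keep working in the meantime.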

Conclusion & Outlook

Understanding the theory behind Shiny and the asynchronous programming paradigm is an important step towards understanding how future & promises work. Deeper insight into the architecture of the systems also sharpens the view of which optimizations are necessary and where they can be applied. In the second part of this article we will see how these ideas can be put into practice using an intuitive syntax and how this can influence the development of an app.

We are experts in developing Shiny applications and building productive IT infrastructures in the data science context. Do you have questions on these topics? We would be pleased to hear from you.