Big data was one of the biggest topics at this year’s useR! conference in Albacete, and it is definitely one of today’s hottest buzzwords. But what defines “Big Data”? And on the practical side: how can big data be tackled in R?

Which data is considered big?

Hadley Wickham, one of the best-known R developers, gave an interesting conceptual definition of Big Data in his useR! conference talk “BigR data”. In traditional analysis, developing a statistical model takes more time than the computer needs for the calculation. With Big Data this relationship is reversed: Big Data comes into play when the CPU time for the calculation exceeds the time spent on the cognitive process of designing a model.

In his talk at the useR! conference, Jan Wijffels proposed dividing data into three classes according to size. As a rule of thumb: data sets that contain up to one million records can easily be processed with standard R. Data sets with about one million to one billion records can also be processed in R, but need some additional effort. Data sets that contain more than one billion records need to be analyzed with MapReduce algorithms. These algorithms can be designed in R and run through connectors to Hadoop and the like.
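To make the map and reduce steps concrete, here is a minimal sketch in base R. It runs locally on simulated data and does not use Hadoop or any connector package; the variable names are chosen for illustration only. Each chunk is reduced to a partial result (map), and the partial results are then combined (reduce).

```r
set.seed(42)

# Simulated values standing in for a data set that would not fit on one machine
values <- runif(1e5)

# Split the data into 10 chunks, as a distributed file system would
chunks <- split(values, ceiling(seq_along(values) / 1e4))

# Map step: every chunk is summarised independently by its sum and its count
partials <- lapply(chunks, function(x) c(sum = sum(x), n = length(x)))

# Reduce step: the partial results are added up element by element
total <- Reduce(`+`, partials)

# The overall mean follows from the combined sum and count
mean_mr <- unname(total["sum"] / total["n"])
all.equal(mean_mr, mean(values))
```

The mean works here because it can be rebuilt from sums and counts. Statistics that cannot be decomposed this way, such as the median, need a different algorithm.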

The number of records in a data set is only a rough indicator of data size, though. What matters is not the size of the original data set, but the size of the biggest object created during the analysis. Depending on the type of analysis, a relatively small data set can lead to very large objects. To give an example: the distance matrix in a hierarchical cluster analysis of 10,000 records contains almost 50 million distances.
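The figure of almost 50 million follows from the number of distinct pairs among n records, n(n - 1)/2. The short sketch below works this out. The helper name n_distances is made up for this example, and the memory estimate assumes 8 bytes per stored distance (a double-precision value).

```r
# Number of pairwise distances among n records (lower triangle of the matrix)
n_distances <- function(n) n * (n - 1) / 2

n <- 10000
n_distances(n)                 # 49995000 distances

# Rough memory footprint in MiB, assuming 8 bytes per distance
n_distances(n) * 8 / 1024^2    # roughly 381 MiB

# Sanity check on a small case: dist() on 5 records stores 10 distances
length(dist(matrix(rnorm(10), nrow = 5))) == n_distances(5)
```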

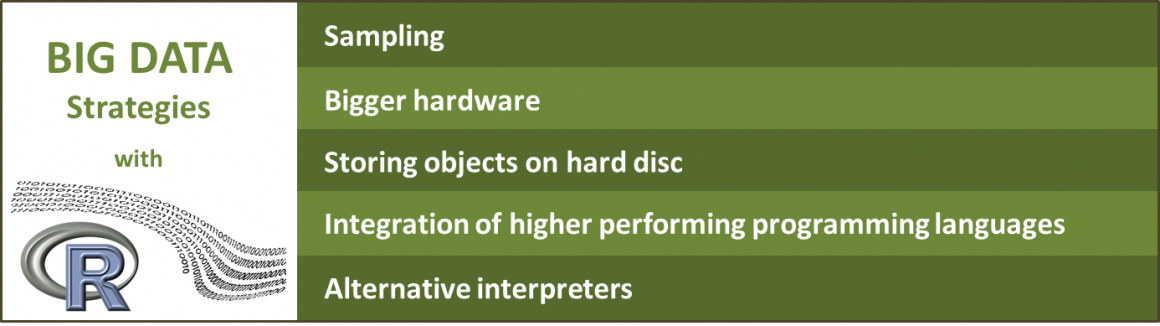

Big Data Strategies in R

If Big Data has to be tackled with R, five different strategies can be considered:

Sampling

If data is too big to be analyzed in full, its size can be reduced by sampling. Naturally, the question arises whether sampling significantly decreases the performance of a model. More data is of course generally better than less. But according to Hadley Wickham’s useR! talk, sample-based model building is acceptable, at least once the size of the data crosses the one billion record threshold.

If sampling can be avoided, it is advisable to use another Big Data strategy. But if sampling is necessary for whatever reason, it can still lead to satisfactory models, especially if the sample is

- still (kind of) big in total numbers,

- not too small in proportion to the full data set,

- not biased.
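As an illustration of these criteria, the following sketch draws a simple random sample from simulated data and compares a linear model fitted on the sample with one fitted on the full data. The data, the 10% sampling fraction, and the model are assumptions made for this example, not taken from the talks mentioned above.

```r
set.seed(123)

# Simulated "full" data set with a known relationship: y = 2 + 3x + noise
n_full <- 2e5
full <- data.frame(x = rnorm(n_full))
full$y <- 2 + 3 * full$x + rnorm(n_full)

# Simple random sample without replacement: 10% of the rows
idx <- sample(nrow(full), size = n_full / 10)
sampled <- full[idx, ]

fit_full   <- lm(y ~ x, data = full)
fit_sample <- lm(y ~ x, data = sampled)

# Compare the estimated coefficients side by side
rbind(full = coef(fit_full), sample = coef(fit_sample))
```

Because every row has the same chance of being drawn, the sample is unbiased by construction. With real data, a stratified sample may be needed to keep rare groups represented.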

Bigger hardware

R keeps all objects in memory, which can become a problem as the data grows. One of the easiest ways to deal with Big Data in R is simply to increase the machine’s memory. Today, R can address 8 TB of RAM when it runs on a 64-bit machine. In many situations that is a sufficient improvement over the roughly 2 GB of addressable RAM on 32-bit machines.
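Whether more memory is enough can be estimated before loading anything. The sketch below is a back-of-the-envelope calculation that assumes a purely numeric table with 8 bytes per value; estimate_gib is a hypothetical helper, and real objects carry some overhead and are often copied during analysis.

```r
# Rough size in GiB of a numeric table with n_rows x n_cols values
estimate_gib <- function(n_rows, n_cols, bytes_per_value = 8) {
  n_rows * n_cols * bytes_per_value / 1024^3
}

# One billion records with 10 numeric columns
estimate_gib(1e9, 10)    # roughly 74.5 GiB

# For objects already in memory, object.size() reports the actual footprint
x <- numeric(1e6)
print(object.size(x), units = "MB")
```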

Store objects on hard disc and analyze them chunkwise

As an alternative, there are packages that avoid storing data in memory. Instead, objects are stored on hard disc and analyzed chunkwise. As a side effect, chunking lends itself naturally to parallelization, provided the algorithm allows the chunks to be analyzed independently. A downside of this strategy is that only those algorithms (and R functions in general) can be used that are explicitly designed to deal with the disc-based data types.
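The chunkwise principle can be sketched with base R alone, without any of the packages built for this purpose. The example below writes a small temporary CSV file as a stand-in for a file too large for memory, then reads it through a connection 1,000 lines at a time and keeps only a running sum and count. The file, the column name, and the chunk size are assumptions for illustration.

```r
# Toy CSV file standing in for a file that would not fit in memory
path <- tempfile(fileext = ".csv")
set.seed(7)
write.csv(data.frame(value = runif(10000)), path, row.names = FALSE)

con <- file(path, open = "r")
header <- readLines(con, n = 1)   # consume the header line once

chunk_size <- 1000
total <- 0
n <- 0

repeat {
  lines <- readLines(con, n = chunk_size)
  if (length(lines) == 0) break   # end of file reached

  # Parse only the current chunk; earlier chunks are no longer in memory
  chunk <- read.csv(text = lines, header = FALSE, col.names = "value")
  total <- total + sum(chunk$value)
  n <- n + nrow(chunk)
}
close(con)

chunk_mean <- total / n
chunk_mean
```

Only one chunk is held in memory at any time, so the peak memory use depends on the chunk size rather than on the file size.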

“ff” and “ffbase” are probably the best-known CRAN packages following this principle. Revolution R Enterprise, a commercial product, uses this strategy as well with its popular “scaleR” package. Compared to ff and ffbase, Revolution scaleR offers a wider and faster-growing range of analytic functions. For instance, the Random Forest algorithm has recently been added to the scaleR function set, but is not yet available in ffbase.

Integration of higher performing programming languages like C++ or Java

Integrating high-performance programming languages is another alternative. Small parts of the program are moved from R to another language to avoid bottlenecks and computationally expensive procedures. The aim is to balance R’s more elegant way of dealing with data on the one hand against the higher performance of other languages on the other.

The outsourcing of code chunks from R to another language can easily be hidden in functions. In this case, proficiency in other programming languages is mandatory for the developers, but not for the users of these functions.

rJava, a package that connects R and Java, is an example of this kind. Many R packages take advantage of it, mostly invisibly to their users. Rcpp, the integration of C++ and R, has gained some attention recently, as Dirk Eddelbuettel has published his book “Seamless R and C++ Integration with Rcpp” in the popular Springer series “UseR!”. In addition, Hadley Wickham has added a chapter on Rcpp to his book “Advanced R development”, which will be published in early 2014. It is relatively easy to outsource code from R to C++ with Rcpp; a basic understanding of C++ is sufficient to make use of it.

Alternative interpreters

A relatively new approach to dealing with Big Data in R is to use alternative interpreters. The first one that became popular with a wider audience was pqR (pretty quick R). Duncan Murdoch from the R Core team has announced that pqR’s suggested improvements will be integrated into the core of R in one of the next versions.

Another very ambitious open-source project is Renjin. Renjin reimplements the R interpreter in Java, so it can run on the Java Virtual Machine (JVM). This may sound like a Sisyphean task, but it is progressing astonishingly fast. A major milestone in the development of Renjin is scheduled for the end of 2013.

Tibco created a C++-based interpreter called TERR. Besides the implementation language, TERR differs from Renjin in how object references are modeled. TERR is available for free for scientific and testing purposes. Enterprises have to purchase a licensed version if they use TERR in production.

Another alternative R interpreter is offered by Oracle. Oracle R uses Intel’s math library and therefore achieves higher performance without changing R’s core. Besides the interpreter, which is free to use, Oracle offers Oracle R Enterprise, a component of Oracle’s “Advanced Analytics” database option. It allows any R code to be run on the database server and has a rich set of functions that are optimized for high-performance in-database computation. Besides data management operations and traditional statistical tasks, these optimized functions cover a wide range of data-mining algorithms such as SVM, Neural Networks, Decision Trees etc.

Conclusion

A couple of years ago, R had the reputation of not being able to handle Big Data at all, and it probably still has that reputation among users who stick to other statistical software. Today, however, a number of quite different Big Data approaches are available. Which one fits best depends on the specifics of the given problem. There is not one solution for all problems. But there is some solution for any problem.